Machine learning models require constant vigilance. In production environments, two critical phenomena can silently degrade model performance: concept drift and data drift.

🎯 Why Understanding Drift Matters in Production ML

Deploying a machine learning model is just the beginning of its lifecycle. Models that perform exceptionally well during testing can quickly deteriorate when facing real-world data. This degradation doesn’t happen randomly—it follows predictable patterns that data scientists must understand to maintain reliable AI systems.

The challenge lies in distinguishing between different types of drift. While both concept drift and data drift signal changes in your data ecosystem, they represent fundamentally different problems requiring distinct solutions. Misdiagnosing one for the other can lead to wasted resources and continued performance degradation.

Undetected drift carries real business costs. A recommendation engine that stops converting users, a fraud detection system that misses new attack patterns, or a predictive maintenance model that fails to anticipate failures: all of these scenarios can stem from drift-related issues that weren't properly addressed.

🌊 Defining Data Drift: When Your Input Changes

Data drift, also known as covariate shift or feature drift, occurs when the statistical distribution of your input features changes over time. Think of it as the ground beneath your model shifting, even though the fundamental relationships you’re trying to predict remain stable.

Imagine you’ve built a credit scoring model trained on data from 2019. By 2023, average income levels have changed, employment patterns have shifted, and typical debt-to-income ratios look different. The features themselves have evolved, but the underlying relationship between these features and creditworthiness hasn’t fundamentally changed.

Common Causes of Data Drift

Several factors contribute to data drift in production systems. Seasonal variations represent one of the most predictable causes—retail sales data looks dramatically different during holiday seasons compared to regular months. Your features shift naturally with calendar cycles.

Market dynamics also drive data drift. Economic recessions, technological innovations, or regulatory changes can alter the distribution of your input variables without necessarily changing the relationships you’re modeling. A housing price prediction model experiences data drift when interest rates fluctuate significantly.

Operational changes within your organization can introduce drift too. If you expand into new geographic markets, change your customer acquisition strategy, or modify your product offerings, the incoming data profile will shift accordingly.

Detecting Data Drift Effectively

Statistical tests provide the foundation for data drift detection. The Kolmogorov-Smirnov test compares distributions of continuous features, while the chi-square test works well for categorical variables. Both test whether your current feature distributions differ from your training data baseline. With very large samples even trivial shifts can come out statistically significant, so read the size of the test statistic alongside the p-value.
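To make the two tests concrete, here is a minimal pure-Python sketch of the statistics behind them. The function names and the dictionary-of-counts input are assumptions made for illustration, and the chi-square version treats the baseline mix as the expected distribution and ignores categories that never appeared in the baseline. In practice most teams would reach for a statistics library (for example `scipy.stats.ks_2samp`) to get p-values as well.

```python
from bisect import bisect_right


def ks_statistic(baseline, current):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    a, b = sorted(baseline), sorted(current)
    # The gap can only change at observed values, so checking those is enough.
    return max(
        abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b))
        for x in a + b
    )


def chi_square_statistic(baseline_counts, current_counts):
    """Pearson chi-square statistic; expected counts are scaled from the baseline mix."""
    total_base = sum(baseline_counts.values())
    total_cur = sum(current_counts.values())
    stat = 0.0
    for category, base_count in baseline_counts.items():
        # Assumes every baseline category has a non-zero count.
        expected = total_cur * base_count / total_base
        observed = current_counts.get(category, 0)
        stat += (observed - expected) ** 2 / expected
    return stat
```

A KS statistic of 0 means the two samples have identical empirical distributions, while 1 means they do not overlap at all.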

Population stability index (PSI) offers another powerful metric. It measures the shift in variable distribution between two samples, with values above 0.2 typically indicating significant drift requiring attention. Many teams calculate PSI daily or weekly for critical features.
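As a rough illustration of how PSI can be computed, the sketch below bins a numeric feature using equal-width bins derived from the baseline sample and sums `(actual - expected) * ln(actual / expected)` over the bins. The bin count, the `eps` floor for empty bins, and the function name are assumptions chosen for this example, not a standard.

```python
import math


def psi(baseline, current, n_bins=10, eps=1e-6):
    """Population stability index between a baseline and a current sample."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if the baseline has no spread.
    width = (hi - lo) / n_bins or 1.0

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range fall into the edge bins.
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = bin_shares(baseline), bin_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

Because the `eps` floor inflates the score whenever a bin is empty in one sample, quantile-based bins or a larger floor may behave better on small samples.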

Visual monitoring through distribution plots and histograms helps teams quickly identify drift patterns. Overlaying current feature distributions against training distributions makes shifts immediately apparent to human observers, complementing automated statistical tests.

🔄 Understanding Concept Drift: When Relationships Evolve

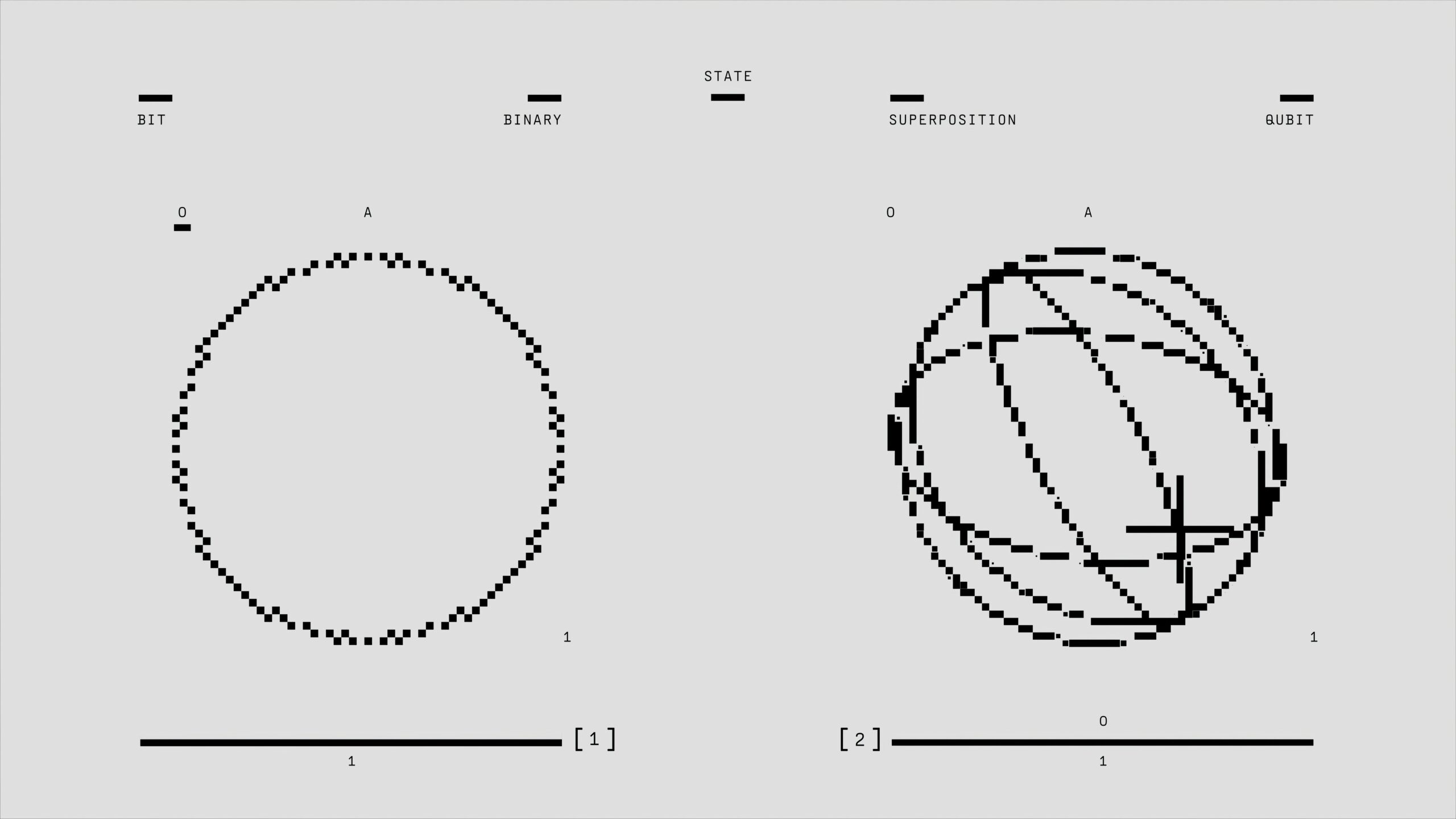

Concept drift represents a more subtle and potentially dangerous phenomenon. Here, the relationship between your input features and target variable changes over time. The inputs might look similar, but they predict different outcomes than they used to.

Consider a spam detection model. The words and patterns that indicated spam in 2015 might appear in legitimate emails today. Spammers continuously adapt their tactics, changing the fundamental relationship between email characteristics and spam probability. Your features haven’t necessarily changed distribution, but their predictive power has evolved.

This type of drift directly impacts your model’s ability to make accurate predictions. Even if your input data distribution remains stable, concept drift erodes model performance because the learned patterns no longer reflect reality.

Types of Concept Drift Patterns

Sudden concept drift happens when relationships change abruptly. A pandemic declaration, major policy change, or market crash can instantly alter how features relate to outcomes. Your model’s accuracy drops sharply and noticeably within a short timeframe.

Gradual concept drift unfolds slowly over extended periods. Consumer preferences evolve, technologies mature, and behavioral patterns shift incrementally. This drift proves harder to detect because performance degradation happens so gradually that it might go unnoticed until significant damage accumulates.

Recurring concept drift follows cyclical patterns. Seasonal businesses experience this regularly—the factors predicting summer sales differ from winter sales predictors, yet these patterns repeat annually. Models must adapt to recognize and accommodate these recurring shifts.

Identifying Concept Drift Signals

Performance metrics provide the most direct evidence of concept drift. When accuracy, precision, recall, or other relevant metrics decline while input distributions remain stable, concept drift is the likely culprit. Regular model evaluation on recent data samples reveals these trends.
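One simple way to run this kind of evaluation is a rolling accuracy over the most recent labeled predictions, compared against the accuracy measured at training time. The class name, window size, and tolerance below are hypothetical choices for the sketch.

```python
from collections import deque


class RollingAccuracy:
    """Accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window=500):
        # deque with maxlen discards the oldest outcome automatically.
        self.outcomes = deque(maxlen=window)

    def update(self, predicted, actual):
        """Record one prediction and return the current rolling accuracy."""
        self.outcomes.append(predicted == actual)
        return sum(self.outcomes) / len(self.outcomes)


def accuracy_dropped(rolling_accuracy, baseline_accuracy, tolerance=0.05):
    """True when rolling accuracy falls more than `tolerance` below the baseline."""
    return rolling_accuracy < baseline_accuracy - tolerance
```

A drop flagged here only points to concept drift if the input distributions were checked separately and found stable.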

Prediction confidence scores can signal concept drift before performance metrics degrade significantly. If your model becomes increasingly uncertain about its predictions (lower confidence scores) despite similar input distributions, the underlying concepts may be shifting.
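For a classifier that outputs class probabilities, one possible uncertainty signal is the average entropy of those probabilities over a batch of recent predictions. This is a sketch under that assumption; the function name is made up for the example, and a rising value is a hint to investigate rather than proof of drift.

```python
import math


def mean_entropy(probability_rows):
    """Average Shannon entropy (in nats) of per-prediction class probabilities."""

    def entropy(row):
        # Zero-probability classes contribute nothing to the sum.
        return -sum(p * math.log(p) for p in row if p > 0)

    return sum(entropy(row) for row in probability_rows) / len(probability_rows)
```

Tracking this value per day or per week and comparing it with the level seen on validation data gives a trend that does not depend on waiting for ground-truth labels.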

Residual analysis helps detect subtle concept drift. Plotting prediction errors over time can reveal systematic patterns indicating that your model’s understanding of the relationship between features and targets no longer aligns with reality.

⚖️ The Practical Differences That Matter

Understanding the distinction between these drift types determines your response strategy. Data drift suggests your model might still be valid but needs recalibration for the new input space. Concept drift indicates your model’s fundamental assumptions about the world are outdated.

Response urgency differs significantly. Data drift often allows for planned, scheduled retraining cycles. You can implement gradual adjustments and test thoroughly before deployment. Concept drift, especially sudden variants, may require immediate intervention to prevent serious prediction failures.

Diagnostic Approach Comparison

Diagnosing data drift focuses on input feature analysis. You examine feature distributions, calculate statistical differences from baseline, and monitor feature correlations. The investigation centers on understanding how your X variables have changed.

Diagnosing concept drift requires analyzing the X-Y relationship. You evaluate model performance on recent data, examine prediction errors for patterns, and assess whether feature importance has shifted. The investigation focuses on whether your learned function remains valid.

Here’s a practical diagnostic framework:

- First, check model performance metrics—declining accuracy suggests some form of drift

- Second, analyze input feature distributions against training baselines

- Third, if features have shifted but performance remains stable, monitor closely but don’t panic

- Fourth, if features are stable but performance declined, suspect concept drift

- Finally, if both features and performance shifted, you’re likely dealing with both drift types simultaneously
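The checklist above can be sketched as a small decision function. The two boolean inputs are assumed to come from whatever feature and performance checks you already run, and the returned labels are illustrative wording, not a standard taxonomy.

```python
def diagnose_drift(features_shifted: bool, performance_dropped: bool) -> str:
    """Map the two monitoring signals onto the diagnostic framework."""
    if features_shifted and performance_dropped:
        return "data drift and concept drift likely"
    if performance_dropped:
        # Inputs look stable, yet the model is getting worse.
        return "concept drift suspected"
    if features_shifted:
        # Inputs moved, but the model still generalizes.
        return "data drift, monitor closely"
    return "no drift detected"
```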

🛠️ Strategic Response Frameworks

Responding to data drift involves several strategies depending on severity. Minor shifts might not require immediate action—your model may generalize adequately to the new input space. Moderate drift suggests scheduled retraining with recent data that reflects the new distribution.

Severe data drift may require feature engineering adjustments. If key features have fundamentally changed, you might need to create new features that remain stable or develop normalization strategies that account for distribution shifts.

Tackling Concept Drift Effectively

Concept drift demands more aggressive responses. Complete model retraining becomes necessary when relationships have fundamentally changed. This retraining should prioritize recent data that reflects current concepts while potentially downweighting or excluding outdated historical data.
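One way to downweight older data is an exponential decay on each example's age, so that a sample loses half its influence every fixed number of days. The half-life value and function name below are assumptions for illustration; the right decay rate depends on how quickly your domain changes.

```python
def recency_weights(ages_in_days, half_life_days=90.0):
    """Per-sample training weights that halve every `half_life_days`."""
    return [0.5 ** (age / half_life_days) for age in ages_in_days]


# A sample from today, one from 90 days ago, and one from 180 days ago.
weights = recency_weights([0, 90, 180])
```

The resulting list can be passed to any training routine that accepts per-sample weights; many scikit-learn estimators, for instance, take a `sample_weight` argument in `fit`.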

Ensemble approaches can mitigate concept drift impact. Maintaining multiple models trained on different time periods allows your system to weight predictions based on which model best understands current patterns. This approach provides resilience against sudden shifts.
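A minimal sketch of this weighting idea, assuming each model in the ensemble has a recent error score (lower is better) and produces a numeric prediction. Inverse-error weighting is just one simple scheme chosen for the example, and both function names are hypothetical.

```python
def ensemble_weights(recent_errors, eps=1e-9):
    """Weights proportional to inverse recent error, normalized to sum to 1."""
    # eps avoids division by zero for a model with no recent errors.
    inverse = [1.0 / (error + eps) for error in recent_errors]
    total = sum(inverse)
    return [w / total for w in inverse]


def weighted_prediction(predictions, weights):
    """Combine per-model numeric predictions using the given weights."""
    return sum(p * w for p, w in zip(predictions, weights))
```

With recent errors of 0.1 and 0.3, the first model receives roughly three quarters of the weight, so the ensemble leans toward whichever model currently tracks reality best.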

Online learning strategies prove particularly effective for recurring or gradual concept drift. These approaches continuously update model parameters as new data arrives, allowing the model to adapt to changing relationships without complete retraining cycles.
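To show what a continuous parameter update can look like, here is a small linear regressor trained by stochastic gradient descent, one example at a time. It is a toy sketch under simple assumptions (squared-error loss, fixed learning rate, the hypothetical class name `OnlineLinearModel`), not a production learner.

```python
class OnlineLinearModel:
    """Linear regressor updated one example at a time with SGD."""

    def __init__(self, n_features, learning_rate=0.01):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.bias + sum(w * xi for w, xi in zip(self.weights, x))

    def update(self, x, y):
        """Take one gradient step on the squared error for (x, y)."""
        error = self.predict(x) - y
        self.weights = [
            w - self.learning_rate * error * xi
            for w, xi in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error
        return error
```

Because every new labeled example nudges the parameters, the model follows a changed relationship without a separate retraining job. The trade-off is that a fixed learning rate also makes it react to noise, so the rate needs tuning for each use case.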

📊 Building Robust Monitoring Systems

Effective drift management requires comprehensive monitoring infrastructure. This system should track both input distributions and model performance continuously, providing early warning before drift causes significant business impact.

Feature monitoring dashboards should display distribution statistics for all critical features. Tracking means, standard deviations, percentiles, and distribution shapes over rolling time windows helps teams spot emerging trends before they become problems.

Performance dashboards need real-time or near-real-time updates. Tracking accuracy, precision, recall, and domain-specific metrics on recent predictions reveals concept drift quickly. Segmenting these metrics by important dimensions (geography, customer segment, product category) provides deeper insights.

Alert Strategy Design

Effective alerting balances sensitivity with alert fatigue. Setting thresholds too aggressively floods teams with false alarms, while too-relaxed thresholds allow significant drift to go unnoticed. Multi-level alerting systems work well—information alerts for minor deviations, warnings for moderate drift, and critical alerts for severe changes.

Contextual alerts prove more actionable than simple threshold-based systems. An alert that says “customer age distribution PSI exceeded 0.25” provides more value than “data drift detected,” enabling faster diagnosis and response.
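The two ideas above, tiered severity and contextual messages, can be combined in a few lines. The threshold values and the `drift_alert` name are assumptions for this sketch; real thresholds should be tuned per feature to keep alert volume manageable.

```python
# (level, PSI threshold) pairs, checked from most to least severe.
ALERT_LEVELS = [("critical", 0.25), ("warning", 0.20), ("info", 0.10)]


def drift_alert(feature_name, psi_value, levels=ALERT_LEVELS):
    """Return a contextual alert message, or None if no threshold is crossed."""
    for level, threshold in levels:
        if psi_value >= threshold:
            return (
                f"{level.upper()}: {feature_name} PSI {psi_value:.2f} "
                f"exceeded {threshold:.2f}"
            )
    return None
```

Including the feature name, the observed value, and the threshold in the message lets whoever receives the alert start diagnosing without opening a dashboard first.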

🚀 Proactive Architecture Patterns

Building drift-resilient ML systems starts with architectural decisions. Champion-challenger frameworks allow you to test retrained models against production models continuously, switching when the challenger demonstrates superior performance on recent data.
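A champion-challenger switch can be reduced to a comparison on recent labeled data. In this sketch, models are assumed to be plain callables that map an input to a predicted label, and `min_gain` is a hypothetical margin that keeps the system from swapping models over noise.

```python
def accuracy(model, recent_examples):
    """Share of recent (input, label) pairs the model predicts correctly."""
    hits = sum(model(x) == y for x, y in recent_examples)
    return hits / len(recent_examples)


def select_model(champion, challenger, recent_examples, min_gain=0.01):
    """Promote the challenger only if it beats the champion by `min_gain`."""
    champion_acc = accuracy(champion, recent_examples)
    challenger_acc = accuracy(challenger, recent_examples)
    if challenger_acc - champion_acc >= min_gain:
        return challenger
    return champion
```

In a real deployment the comparison would usually run on a held-out slice of recent traffic and use the business metric that matters, not raw accuracy alone.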

Shadow mode deployments let new models run alongside production systems without affecting business outcomes. This approach provides real-world performance data before committing to model updates, reducing risk when addressing suspected drift.

Automated retraining pipelines enable rapid response to confirmed drift. These systems should handle data collection, preprocessing, training, validation, and deployment with minimal manual intervention, reducing the time between drift detection and remediation.

Feature Store Benefits

Feature stores provide centralized feature management that simplifies drift monitoring. By serving as the single source of truth for feature values, they enable consistent comparison between training and serving distributions. Built-in monitoring capabilities can track feature statistics automatically.

Version control for features becomes crucial when addressing drift. Understanding which feature definitions were used during training versus serving helps diagnose unexpected distribution shifts caused by feature engineering bugs rather than genuine environmental changes.

💡 Learning From Real-World Scenarios

E-commerce recommendation systems frequently encounter both drift types. During the pandemic, purchasing patterns shifted dramatically—people bought different products (data drift) and the features predicting purchase intent changed (concept drift). Companies that quickly adapted their models maintained revenue while others struggled.

Financial fraud detection faces constant concept drift as fraudsters evolve tactics. Transaction patterns that once indicated fraud become common legitimate behavior, while new fraud patterns emerge. Successful systems implement continuous learning approaches that adapt to these shifting concepts.

Healthcare predictive models experience data drift when patient demographics shift or when hospitals adopt new diagnostic equipment. The key features remain predictive, but their distributions change. Regular recalibration maintains accuracy without complete model redesign.

🎓 Building Team Capabilities

Addressing drift effectively requires cross-functional collaboration. Data scientists must understand business context to distinguish meaningful drift from acceptable variance. Domain experts need sufficient ML literacy to contribute to drift diagnosis and response planning.

Establishing clear ownership prevents drift from falling through organizational cracks. Assigning specific teams or individuals responsibility for monitoring production models ensures someone watches for drift signals and coordinates responses when detected.

Documentation practices matter enormously. Recording baseline distributions, model assumptions, known drift patterns, and response histories creates institutional knowledge that accelerates future drift management. This documentation helps new team members understand model behavior and historical challenges.

🔮 Preparing for Future Challenges

The ML landscape continues evolving, bringing new drift challenges. As models become more complex and data sources multiply, distinguishing between drift types and tracing their causes becomes more difficult. Investing in robust monitoring infrastructure and team capabilities today prepares organizations for tomorrow's challenges.

Automated drift response systems represent the next frontier. Machine learning systems that detect drift, diagnose its type, implement appropriate responses, and validate outcomes with minimal human intervention will become increasingly viable and necessary as model portfolios grow.

Understanding the practical differences between concept drift and data drift empowers teams to maintain reliable ML systems in dynamic environments. These phenomena aren’t obstacles to avoid but natural aspects of production ML that well-prepared teams can manage effectively. By implementing comprehensive monitoring, building appropriate response capabilities, and fostering cross-functional collaboration, organizations can navigate these waters successfully and maintain the business value their ML investments promise to deliver.

Toni Santos is a technical researcher and ethical AI systems specialist focusing on algorithm integrity monitoring, compliance architecture for regulatory environments, and the design of governance frameworks that make artificial intelligence accessible and accountable for small businesses. Through an interdisciplinary and operationally-focused lens, Toni investigates how organizations can embed transparency, fairness, and auditability into AI systems across sectors, scales, and deployment contexts.

His work is grounded in a commitment to AI not only as technology, but as infrastructure requiring ethical oversight. From algorithm health checking to compliance-layer mapping and transparency protocol design, Toni develops the diagnostic and structural tools through which organizations maintain their relationship with responsible AI deployment. With a background in technical governance and AI policy frameworks, Toni blends systems analysis with regulatory research to reveal how AI can be used to uphold integrity, ensure accountability, and operationalize ethical principles.

As the creative mind behind melvoryn.com, Toni curates diagnostic frameworks, compliance-ready templates, and transparency interpretations that bridge the gap between small business capacity, regulatory expectations, and trustworthy AI. His work is a tribute to:

- The operational rigor of Algorithm Health Checking Practices

- The structural clarity of Compliance-Layer Mapping and Documentation

- The governance potential of Ethical AI for Small Businesses

- The principled architecture of Transparency Protocol Design and Audit

Whether you're a small business owner, compliance officer, or curious builder of responsible AI systems, Toni invites you to explore the practical foundations of ethical governance: one algorithm, one protocol, one decision at a time.