Generative AI is revolutionizing how we create content, analyze data, and interact with technology, but its true power lies in transparent, ethical implementation.

The rapid advancement of artificial intelligence has brought us to a pivotal moment where generative models can produce text, images, code, and multimedia content with unprecedented sophistication. However, as these technologies become more integrated into our daily workflows and business operations, the need for clear transparency protocols has never been more critical. Organizations and individuals alike must navigate an increasingly complex landscape where innovation meets accountability.

Understanding how to harness generative AI while maintaining ethical standards and transparent practices isn’t just a technical challenge; it’s a fundamental requirement for sustainable growth in the digital age. This article explores the frameworks, methodologies, and practices for implementing transparency protocols that let organizations realize the potential of modern generative AI systems.

🔍 The Foundation of AI Transparency: Why It Matters

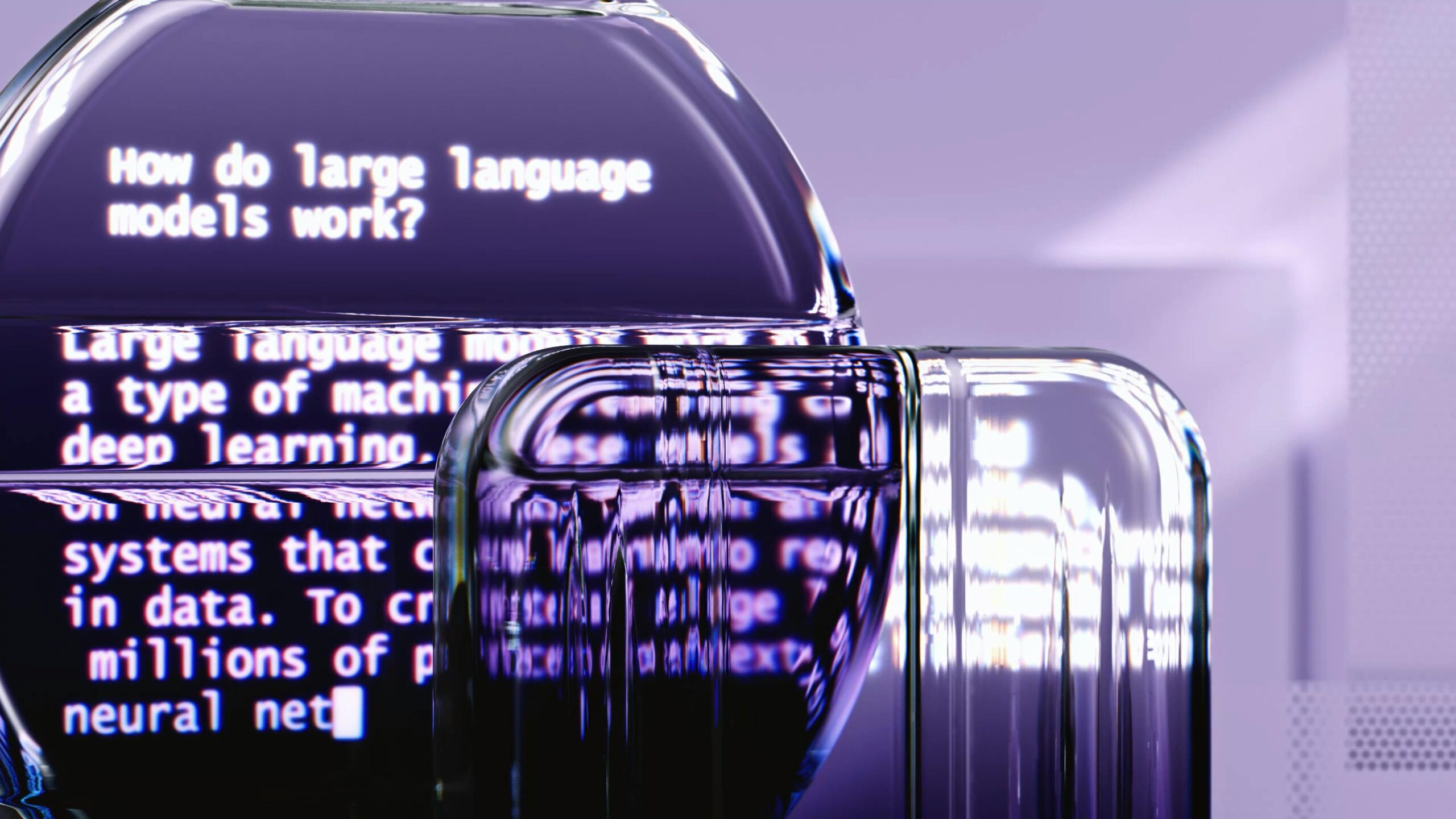

Transparency in generative AI refers to the ability to understand, explain, and audit how these systems make decisions and produce outputs. Unlike traditional software where input-output relationships are deterministic and traceable, generative AI models operate through complex neural networks with billions of parameters, making their decision-making processes inherently opaque.

This opacity creates significant challenges across multiple dimensions. Users need to trust AI-generated content, businesses must comply with regulatory requirements, and society demands accountability when AI systems impact critical decisions. Without proper transparency protocols, even the most sophisticated generative AI implementations can face rejection, legal challenges, or public backlash.

The stakes are particularly high in sectors like healthcare, finance, legal services, and education, where AI-generated insights directly influence outcomes affecting human lives. A medical diagnosis assisted by AI, financial advice generated by algorithms, or educational content created by language models all require verifiable transparency to ensure reliability and safety.

Building Blocks of Effective Transparency Protocols 🧱

Implementing transparency in generative AI systems requires a multi-layered approach that addresses technical, organizational, and ethical dimensions. The following components form the cornerstone of comprehensive transparency protocols.

Model Documentation and Provenance

Every generative AI system should maintain detailed documentation covering its architecture, training data sources, known limitations, and intended use cases. This documentation serves as the foundation for all transparency efforts, enabling stakeholders to understand the system’s capabilities and constraints.

Training data provenance is particularly crucial. Organizations must track where training data originated, how it was collected, whether appropriate consent was obtained, and what biases might be embedded within it. This level of documentation allows for better assessment of potential risks and limitations in the model’s outputs.
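As a rough illustration, model documentation and provenance can be kept as structured data instead of free text, which makes gaps easy to check automatically. The sketch below is a minimal example under assumptions: the field names, the `DataSource`/`ModelCard` classes, and the `provenance_gaps` rule are hypothetical. They are loosely inspired by the model-card idea and do not follow any particular toolkit's schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DataSource:
    """Provenance record for one training-data source (hypothetical fields)."""
    name: str
    origin: str              # where the data came from: URL, vendor, internal system
    collection_method: str   # e.g. "web crawl", "licensed", "internal logs"
    consent_obtained: bool
    known_biases: list[str] = field(default_factory=list)


@dataclass
class ModelCard:
    """Minimal model documentation record; not a standard schema."""
    model_name: str
    version: str
    architecture: str
    intended_uses: list[str]
    limitations: list[str]
    training_data: list[DataSource]

    def provenance_gaps(self) -> list[str]:
        """Names of sources with no recorded consent or no recorded bias assessment."""
        return [
            s.name
            for s in self.training_data
            if not s.consent_obtained or not s.known_biases
        ]

    def to_json(self) -> str:
        # asdict() recurses into the nested DataSource records.
        return json.dumps(asdict(self), indent=2)


# Illustrative values only.
card = ModelCard(
    model_name="support-writer",
    version="1.2.0",
    architecture="decoder-only transformer",
    intended_uses=["drafting customer-support replies"],
    limitations=["may state outdated policy details"],
    training_data=[
        DataSource("support-tickets", "internal CRM export", "internal logs",
                   True, ["skews toward English-language tickets"]),
        DataSource("forum-scrape", "public forums", "web crawl", False),
    ],
)
print(card.provenance_gaps())  # ['forum-scrape']
```

Treating an empty `known_biases` list as a gap is a deliberately conservative choice. It forces someone to record "assessed, none found" explicitly rather than leaving the question silently unanswered.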

Output Attribution and Watermarking

As generative AI produces increasingly realistic content, distinguishing between human-created and AI-generated material becomes harder. Robust attribution mechanisms help maintain trust and deter misuse. Digital watermarks embedded in AI outputs can provide evidence of synthetic origin, checkable by specialized detectors, without noticeably degrading the content’s utility.

Several organizations are developing standardized watermarking protocols designed to survive common transformations and edits. Robustness varies, however, and aggressive edits such as paraphrasing text or heavily cropping images can weaken or remove a watermark. Techniques range from imperceptible patterns in images to statistical signatures in text that specialized tools can detect.
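To show what a "statistical signature in text" can look like, here is a simplified sketch of green-list watermark detection, in the spirit of published LLM watermarking schemes. The assumptions are significant. Real schemes work on tokenizer IDs and softly bias the model's logits toward green tokens during sampling. This toy works on whole words and uses a keyed HMAC of (previous token, token) to decide green membership. The key, vocabulary, `GAMMA`, and the "always pick green" generator are all hypothetical. Detection then asks whether green tokens appear more often than the fraction `GAMMA` expected by chance, using a one-proportion z-test.

```python
import hashlib
import hmac
import math
import random

GAMMA = 0.25  # assumed share of tokens that count as "green" at each step


def is_green(key: bytes, prev_token: str, token: str, gamma: float = GAMMA) -> bool:
    """Keyed pseudorandom check: is `token` on the green list given `prev_token`?

    Generator and detector share `key`; without it the split looks random.
    """
    msg = f"{prev_token}\x00{token}".encode("utf-8")
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    # Map the first 8 bytes of the digest to [0, 1) and compare with gamma.
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma


def watermark_z_score(key: bytes, tokens: list[str], gamma: float = GAMMA) -> float:
    """One-proportion z-test: are green tokens over-represented versus chance?"""
    pairs = list(zip(tokens, tokens[1:]))
    n = len(pairs)
    if n == 0:
        return 0.0
    green = sum(is_green(key, prev, tok, gamma) for prev, tok in pairs)
    return (green - gamma * n) / math.sqrt(n * gamma * (1 - gamma))


# Toy demo: a "watermarked" generator that always samples from the green list,
# versus text sampled uniformly from the same vocabulary.
key = b"shared-secret"  # hypothetical detection key
vocab = [f"w{i}" for i in range(200)]
rng = random.Random(0)

marked = ["<s>"]
for _ in range(100):
    greens = [t for t in vocab if is_green(key, marked[-1], t)]
    marked.append(rng.choice(greens))

plain = ["<s>"] + [rng.choice(vocab) for _ in range(100)]

print(round(watermark_z_score(key, marked), 2))  # 17.32: every transition is green
print(round(watermark_z_score(key, plain), 2))   # typically close to 0
```

In practice a detector would flag text only above a conservative threshold (for example z > 4), so that unwatermarked human text is very unlikely to be misattributed. Short texts give weak evidence either way, because `n` is small.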

🎯 Implementing Real-World Transparency Frameworks

Moving from theory to practice requires concrete frameworks that organizations can adopt and customize to their specific contexts. Several industry-leading approaches have emerged as effective models for implementing transparency protocols.

The Tiered Disclosure Approach

Not all stakeholders require the same level of technical detail about generative AI systems. A tiered disclosure framework provides different levels of information based on the audience’s needs and technical expertise. End-users might receive simple, accessible explanations of AI involvement, while technical auditors access comprehensive system documentation, and regulators review compliance-specific information.

This approach balances the need for transparency with practical considerations around proprietary information, user experience, and information overload. Organizations can maintain competitive advantages while still meeting ethical and regulatory transparency requirements.
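One way to implement tiered disclosure is to keep a single internal record per AI system and derive each audience's view from an explicit allow-list of fields. The sketch below assumes hypothetical tier names, field names, and record contents. Its point is the design choice: anything not listed for any tier, such as proprietary prompt templates, never leaves through this path.

```python
from typing import Any

# Hypothetical field names; each tier lists exactly what that audience may see.
BASE = ["ai_involvement", "purpose", "known_limitations"]
TIERS: dict[str, list[str]] = {
    "end_user": BASE,
    "regulator": BASE + ["risk_classification", "human_oversight", "conformity_evidence"],
    "auditor": BASE + ["risk_classification", "human_oversight", "conformity_evidence",
                       "training_data_summary", "evaluation_results"],
}


def disclose(record: dict[str, Any], audience: str) -> dict[str, Any]:
    """Return only the fields the given audience tier is entitled to see.

    Fields absent from every tier (e.g. proprietary prompt templates) are never
    released through this function.
    """
    if audience not in TIERS:
        raise ValueError(f"unknown audience tier: {audience!r}")
    return {k: record[k] for k in TIERS[audience] if k in record}


# Illustrative internal record for one system.
record = {
    "ai_involvement": "Replies are drafted by a language model and reviewed by staff.",
    "purpose": "Customer-support drafting",
    "known_limitations": ["May cite outdated policies"],
    "risk_classification": "limited risk (internal assessment)",
    "human_oversight": "An agent approves every reply before sending",
    "conformity_evidence": "Internal conformity file",
    "training_data_summary": "Licensed support transcripts",
    "evaluation_results": "See internal evaluation report",
    "prompt_templates": "internal only",
}

print(sorted(disclose(record, "end_user")))
# ['ai_involvement', 'known_limitations', 'purpose']
```

An allow-list is used rather than a deny-list so that new internal fields stay private by default until someone deliberately assigns them to a tier.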

Continuous Monitoring and Reporting

Transparency isn’t a one-time implementation but an ongoing commitment. Continuous monitoring of model performance, output quality, bias metrics, and user feedback keeps transparency information current as models, data, and usage change. Regular reporting ensures stakeholders remain informed about system behavior and any emerging issues.

These monitoring systems should capture both quantitative metrics (accuracy rates, processing times, resource consumption) and qualitative assessments (user satisfaction, ethical concerns, unexpected behaviors). The data collected feeds into regular transparency reports shared with relevant stakeholders.
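As a minimal sketch of how such monitoring might feed a report, the code below summarizes a window of logged interactions. It combines quantitative metrics (tail latency, flag rate) with a qualitative signal (user ratings) and lists any breached thresholds. The `Interaction` fields and threshold values are assumptions for illustration. Bias metrics would need group-level data and are omitted here.

```python
import math
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Interaction:
    """One logged generation; fields are illustrative assumptions."""
    latency_ms: float
    flagged: bool           # a reviewer or user reported the output as wrong or harmful
    rating: Optional[int]   # optional 1-5 satisfaction score


# Hypothetical alert thresholds; real values depend on the system and its risk level.
MAX_FLAG_RATE = 0.05
MAX_P95_LATENCY_MS = 2000.0
MIN_MEAN_RATING = 3.5


def p95(values: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    ordered = sorted(values)
    return ordered[math.ceil(0.95 * len(ordered)) - 1]


def window_report(window: list[Interaction]) -> dict:
    """Summarize one monitoring window and list any breached thresholds."""
    if not window:
        raise ValueError("empty monitoring window")
    ratings = [i.rating for i in window if i.rating is not None]
    metrics = {
        "count": len(window),
        "flag_rate": sum(i.flagged for i in window) / len(window),
        "p95_latency_ms": p95([i.latency_ms for i in window]),
        "mean_rating": mean(ratings) if ratings else None,
    }
    alerts = []
    if metrics["flag_rate"] > MAX_FLAG_RATE:
        alerts.append("flag_rate")
    if metrics["p95_latency_ms"] > MAX_P95_LATENCY_MS:
        alerts.append("p95_latency_ms")
    if metrics["mean_rating"] is not None and metrics["mean_rating"] < MIN_MEAN_RATING:
        alerts.append("mean_rating")
    return {"metrics": metrics, "alerts": alerts}


window = [
    Interaction(800, False, 5),
    Interaction(950, True, 2),
    Interaction(1200, False, None),
    Interaction(3100, False, 4),
]
report = window_report(window)
print(report["alerts"])  # ['flag_rate', 'p95_latency_ms']
```

Each window's report could then be archived and rolled up into the periodic transparency reports described above.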

Navigating Regulatory Landscapes and Compliance 📋

The regulatory environment surrounding generative AI is evolving rapidly, with jurisdictions worldwide implementing new requirements for transparency and accountability. Organizations must stay ahead of these developments to ensure compliance while maintaining operational flexibility.

The European Union’s AI Act represents one of the most comprehensive regulatory frameworks, establishing risk-based requirements for AI systems. High-risk applications face stringent obligations, including human oversight, technical documentation, and conformity assessments. Separately from the high-risk tier, the Act imposes transparency obligations on systems that interact directly with people or generate synthetic content. In general, users must be told when they are interacting with an AI system, and AI-generated content must be marked as such in a machine-readable format.

In the United States, regulation is emerging sector by sector. The Federal Trade Commission has issued guidance on AI and algorithms, emphasizing truth in advertising and fairness. Healthcare applications that handle protected health information must comply with HIPAA, while financial services face scrutiny under existing consumer protection laws.

Building Compliance-Ready Systems

Designing generative AI systems with compliance in mind from the outset proves far more effective than retrofitting transparency features later. Key considerations include:

- Implementing comprehensive logging systems that capture input data, processing steps, and output generation

- Creating explainability interfaces that provide human-readable justifications for AI-generated outputs

- Establishing clear data governance policies covering training data, user inputs, and generated content

- Developing incident response protocols for addressing transparency failures or unexpected behaviors

- Maintaining version control and change management systems for AI models and associated documentation
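The logging and version-control points above can be combined in a single audit record per generation call. The following is a minimal sketch under assumptions: the JSON Lines layout, the field names, and the choice to store SHA-256 digests instead of raw text are illustrative, not a regulatory format. The digests let an auditor later confirm which content a logged call produced.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audit_record(model_id: str, model_version: str, prompt: str,
                 output: str, params: dict[str, Any]) -> str:
    """Build one JSON Lines audit entry for a single generation call.

    Storing digests instead of raw text lets an auditor confirm that a given
    prompt/output pair was processed without the log holding sensitive content.
    Note: plain hashes of short, predictable prompts can be guessed by brute
    force; a keyed hash (HMAC) is stronger in that case.
    """
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "model_version": model_version,   # ties the output to a versioned model
        "params": params,                 # sampling settings used for this call
        "input_sha256": sha256_hex(prompt),
        "output_sha256": sha256_hex(output),
    }
    return json.dumps(entry, sort_keys=True)


def output_matches(log_line: str, candidate_output: str) -> bool:
    """Check whether a piece of content is the output recorded in a log line."""
    return json.loads(log_line)["output_sha256"] == sha256_hex(candidate_output)


# Hypothetical model name, version, and sampling parameters.
line = audit_record(
    "support-writer", "1.2.0",
    prompt="Summarize ticket 4411",
    output="The customer reports a login failure after the last update.",
    params={"temperature": 0.2, "max_tokens": 256},
)
print(line)
print(output_matches(line, "The customer reports a login failure after the last update."))  # True
```

Whether raw prompts and outputs should also be retained, and for how long, is a data-governance decision rather than a logging one.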

🤝 Stakeholder Engagement and Communication Strategies

Effective transparency extends beyond technical implementation to encompass how organizations communicate with various stakeholders about their generative AI systems. Different audiences require tailored communication approaches that address their specific concerns and information needs.

User-Facing Transparency

End-users interacting with generative AI systems deserve clear, accessible information about when and how AI is involved in their experience. This transparency should be contextual, providing relevant information at appropriate moments without overwhelming users with technical details.

Simple visual indicators, such as icons or labels identifying AI-generated content, help users make informed decisions about how they engage with the material. More detailed explanations should be readily available for users who want to understand more about the system’s operation.
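A minimal sketch of this layered, user-facing disclosure attaches a short label to each piece of content and adds a link to a fuller explanation when AI was involved. The involvement levels, label wording, and the `example.com` URL are hypothetical placeholders.

```python
from enum import Enum
from typing import Optional


class AIInvolvement(Enum):
    """Hypothetical involvement levels an interface might disclose."""
    NONE = "none"
    ASSISTED = "assisted"    # human-authored, with AI suggestions or edits
    GENERATED = "generated"  # produced by AI, possibly reviewed by a person


# Short labels for the visual indicator; wording is illustrative.
LABELS = {
    AIInvolvement.NONE: None,
    AIInvolvement.ASSISTED: "Written with AI assistance",
    AIInvolvement.GENERATED: "AI-generated",
}


def with_disclosure(content: str, level: AIInvolvement,
                    details_url: str) -> dict[str, Optional[str]]:
    """Attach a brief label, plus a link to a fuller explanation when AI was involved."""
    label = LABELS[level]
    return {
        "content": content,
        "label": label,
        "details_url": details_url if label is not None else None,
    }


# "https://example.com/ai-disclosure" is a placeholder URL.
item = with_disclosure("Your order ships Tuesday.", AIInvolvement.GENERATED,
                       "https://example.com/ai-disclosure")
print(item["label"])  # AI-generated
```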

Internal Organizational Transparency

Within organizations, different teams need varying levels of access to AI system information. Developers require technical documentation, business leaders need strategic insights about capabilities and limitations, and compliance teams must access audit trails and risk assessments.

Creating internal knowledge bases, conducting regular training sessions, and establishing cross-functional AI governance committees ensures organizational alignment on transparency practices and responsibilities.

Technical Tools and Methodologies for Enhanced Transparency 🔧

The rapidly growing field of explainable AI (XAI) provides numerous technical tools and methodologies for implementing transparency in generative systems. These approaches range from model-agnostic techniques to architecture-specific solutions designed for particular types of generative models.

Attention Visualization and Interpretation

For transformer-based generative models, attention weights offer one view of which input tokens the model attended to when producing a given output token. Visualizing attention patterns can help suggest why a model generated particular content. Attention is not a complete or always faithful account of the model’s reasoning, however, and is best treated as one signal among several.

These visualizations can be simplified for non-technical audiences, showing which parts of a prompt most strongly influenced the generated output, or maintained in technical detail for researchers and auditors conducting detailed analyses.
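To make the mechanics concrete, the sketch below computes scaled dot-product attention weights, softmax(q·k / √d), for a single query over a short prompt and renders them as a plain-text bar chart of the kind a non-technical view might show. The 2-dimensional vectors are made up for illustration. With a real model you would extract per-layer, per-head weights instead; Hugging Face Transformers, for example, can return them when a model is called with `output_attentions=True`.

```python
import math


def attention_weights(query: list[float], keys: list[list[float]]) -> list[float]:
    """Scaled dot-product attention for one query: softmax(q . k / sqrt(d))."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    top = max(scores)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def render(tokens: list[str], weights: list[float], width: int = 20) -> str:
    """Plain-text bar chart of attention weights, most-attended token first."""
    rows = sorted(zip(tokens, weights), key=lambda tw: tw[1], reverse=True)
    return "\n".join(
        f"{tok:>10} {'#' * round(w * width):<{width}} {w:.2f}" for tok, w in rows
    )


# Toy vectors, made up for illustration; in a real model the query and keys
# come from a specific layer and head.
tokens = ["refund", "policy", "for", "damaged", "items"]
keys = [[1.0, 0.2], [0.8, 0.1], [0.0, 0.0], [0.3, 1.0], [0.2, 0.4]]
query = [1.0, 0.5]

print(render(tokens, attention_weights(query, keys)))
```

Real models have many layers and heads, so any simplified view has to choose how to aggregate them (for example, averaging heads in one layer). That choice should be documented alongside the visualization.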

Counterfactual Explanations

Counterfactual reasoning asks “what would need to change about the input for the output to be different?” This approach provides intuitive explanations by showing users how modifications to their prompts or inputs would alter the generated results. For creative applications, counterfactuals help users understand the parameter space and refine their requests more effectively.
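A very simple counterfactual search tries small edits to the prompt and reports which ones change the output. The sketch below does this with one-word deletions and optional substitutions. It uses a deterministic, rule-based `toy_generate` function as a hypothetical stand-in for a model. With a real generator you would need fixed sampling (for example, temperature 0 or a fixed seed), and you would likely compare outputs with a similarity measure rather than exact equality.

```python
from typing import Callable, Optional


def toy_generate(prompt: str) -> str:
    """Hypothetical stand-in for a model: deterministic rules keyed on words."""
    words = set(prompt.lower().split())
    tone = "formal" if {"formal", "legal"} & words else "casual"
    length = "short" if "brief" in words else "long"
    return f"{tone}/{length}"


def single_edit_counterfactuals(
    prompt: str,
    generate: Callable[[str], str],
    substitutions: Optional[dict[str, list[str]]] = None,
) -> tuple[str, list[tuple[str, str]]]:
    """Try every one-word deletion (and optional substitution) of the prompt.

    Returns the baseline output plus each edit that changed it.
    """
    words = prompt.split()
    baseline = generate(prompt)
    subs = substitutions or {}
    changed = []
    for i, word in enumerate(words):
        for repl in [None, *subs.get(word.lower(), [])]:
            edited = words[:i] + ([] if repl is None else [repl]) + words[i + 1:]
            out = generate(" ".join(edited))
            if out != baseline:
                edit = f"remove {word!r}" if repl is None else f"replace {word!r} with {repl!r}"
                changed.append((edit, out))
    return baseline, changed


baseline, edits = single_edit_counterfactuals(
    "Write a brief legal summary", toy_generate, {"brief": ["detailed"]}
)
print(baseline)  # formal/short
for edit, out in edits:
    print(f"{edit} -> {out}")
```

The result tells the user that "brief" controls length and "legal" controls tone, which is the kind of actionable explanation the paragraph above describes.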

⚖️ Balancing Innovation with Responsibility

One of the central tensions in implementing transparency protocols involves maintaining competitive innovation while meeting ethical and regulatory obligations. Organizations often worry that excessive transparency might reveal proprietary techniques or limit their ability to rapidly iterate on AI systems.

However, transparency and innovation are not mutually exclusive. Robust transparency protocols can accelerate innovation by building trust, enabling broader adoption, attracting talent, and reducing regulatory friction.

The key lies in strategic transparency—being thoughtful about what information is shared, with whom, and through what channels. Core algorithmic innovations can often remain proprietary while still providing sufficient transparency about system behavior, limitations, and data practices to satisfy ethical and regulatory requirements.

Open Source Contributions and Community Standards

The generative AI community has increasingly embraced open source development and collaborative standard-setting. Projects like Hugging Face’s model cards, Google’s Model Card Toolkit, and various industry consortia working on AI transparency standards demonstrate how collective action can raise transparency baselines across the field.

Organizations can contribute to and benefit from these community efforts, leveraging shared tools and frameworks while maintaining competitive differentiation in their specific applications and implementations.

Measuring and Improving Transparency Over Time 📊

Effective transparency protocols require ongoing measurement and continuous improvement. Establishing clear metrics for transparency helps organizations assess their current state and identify areas for enhancement.

Key performance indicators might include user comprehension rates (how well users understand when and how AI is involved), audit success rates (ability to trace and explain specific outputs), compliance coverage (percentage of regulatory requirements met), and stakeholder satisfaction with transparency communications.
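Three of these indicators reduce to simple ratios once the underlying counts are collected consistently each period. The sketch below shows that arithmetic. The field names, the survey and audit-sampling setup, and the example counts are made up for illustration. Stakeholder satisfaction is left out because it usually comes from survey scales rather than counts.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PeriodCounts:
    """Raw counts for one reporting period; field names are illustrative."""
    comprehension_correct: int  # survey answers correctly identifying AI involvement
    comprehension_total: int
    outputs_sampled: int        # outputs drawn at random for an audit trace
    outputs_traced: int         # sampled outputs traced to model version, inputs, and logs
    requirements_met: int       # applicable requirements satisfied, per a compliance register
    requirements_total: int


def rate(numerator: int, denominator: int) -> Optional[float]:
    """Share of successes, or None when nothing was measured."""
    return numerator / denominator if denominator else None


def transparency_kpis(c: PeriodCounts) -> dict[str, Optional[float]]:
    return {
        "user_comprehension_rate": rate(c.comprehension_correct, c.comprehension_total),
        "audit_success_rate": rate(c.outputs_traced, c.outputs_sampled),
        "compliance_coverage": rate(c.requirements_met, c.requirements_total),
    }


# Made-up counts for illustration only.
q3 = PeriodCounts(172, 200, 50, 47, 34, 40)
print(transparency_kpis(q3))
```

Returning `None` instead of 0 when nothing was measured keeps a missing audit from looking like a failed one in trend reports.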

Regular transparency audits, conducted internally or by third parties, provide objective assessments of how well transparency protocols are functioning in practice. These audits should examine both technical implementation and organizational practices, identifying gaps and recommending improvements.

🚀 Future Horizons: Emerging Transparency Technologies

The field of AI transparency continues to evolve rapidly, with new technologies and approaches constantly emerging. Staying informed about these developments helps organizations anticipate future requirements and opportunities.

Federated learning and privacy-preserving machine learning techniques are enabling new forms of transparency that protect sensitive data while still allowing auditability. Blockchain-based provenance tracking offers tamper-evident records of AI system development and deployment. Advances in explainability are making increasingly sophisticated models more interpretable, with research aiming to do so without sacrificing performance.
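The core idea behind tamper-evident provenance records can be shown without a blockchain at all. In a hash chain, each entry's hash covers the previous entry's hash, so altering any past entry breaks every later link. The sketch below is a minimal single-party version with hypothetical event payloads. A blockchain adds distributed replication and consensus, which this sketch does not. Also, whoever controls this log could rewrite it wholesale and recompute the hashes, so publishing the latest hash somewhere external is what anchors it.

```python
import hashlib
import json
from typing import Any


def entry_hash(prev_hash: str, payload: dict[str, Any]) -> str:
    """Hash the previous link together with this entry's canonical JSON payload."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class ProvenanceLedger:
    """Append-only hash chain: editing any past entry breaks every later link."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict[str, Any]] = []

    def append(self, payload: dict[str, Any]) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = entry_hash(prev, payload)
        self.entries.append({"prev": prev, "payload": payload, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["payload"]):
                return False
            prev = entry["hash"]
        return True


ledger = ProvenanceLedger()
ledger.append({"event": "dataset_registered", "dataset": "support-tickets-v3"})
ledger.append({"event": "model_trained", "model": "support-writer", "version": "1.2.0"})
ledger.append({"event": "deployed", "environment": "production"})
print(ledger.verify())  # True

ledger.entries[0]["payload"]["dataset"] = "support-tickets-v4"  # simulated tampering
print(ledger.verify())  # False
```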

Standardized transparency APIs and interoperability protocols are emerging, making it easier for organizations to implement consistent transparency practices across diverse AI systems and platforms. These standards will likely become increasingly important as regulatory requirements mature and stakeholder expectations rise.

Creating a Culture of Transparency Excellence 🌟

Ultimately, implementing effective transparency protocols for generative AI requires more than technical solutions and procedural checklists. It demands cultivating an organizational culture that values transparency as a core principle rather than viewing it as a compliance burden.

Leadership commitment is essential. When executives and decision-makers consistently prioritize transparency, allocate resources to transparency initiatives, and model transparent communication practices, these values permeate throughout the organization. Employees at all levels feel empowered to raise concerns, ask questions, and contribute to transparency improvements.

Training programs should educate team members about both the why and how of AI transparency. Technical staff need tools and methodologies for implementing transparency features, while non-technical employees need sufficient understanding to communicate effectively with users and stakeholders about AI systems.

Incentive structures should reward transparency excellence rather than penalizing disclosure of limitations or issues. Creating psychological safety around acknowledging AI system constraints encourages honest assessment and continuous improvement rather than defensive concealment.

Practical Roadmap for Implementation Success 🗺️

For organizations beginning their transparency journey or seeking to enhance existing practices, a structured implementation roadmap provides valuable guidance. Start by conducting a comprehensive inventory of all generative AI systems currently in use or under development, assessing each for transparency gaps and risks.

Prioritize systems based on risk level, user exposure, and regulatory requirements. High-risk applications affecting critical decisions or serving vulnerable populations should receive immediate attention. Develop system-specific transparency plans that address documentation, monitoring, user communication, and compliance requirements.
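Prioritizing an inventory can start with a transparent scoring rule that combines the factors above. The sketch below is illustrative only: the `AISystem` fields, the weights, and the exposure cap are assumptions to tune, not a standard. The value of writing the rule down is that the ranking itself becomes explainable and open to challenge.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    name: str
    risk_level: int         # 1 (low) to 3 (high), from an internal risk assessment
    monthly_users: int
    regulated: bool         # subject to sector-specific or AI-specific regulation
    vulnerable_users: bool  # serves minors, patients, or other vulnerable groups


def priority(s: AISystem) -> float:
    """Illustrative score; the weights are assumptions, not a standard."""
    score = 10.0 * s.risk_level
    score += min(s.monthly_users / 10_000, 10)  # exposure, capped so reach can't dominate risk
    score += 8 if s.regulated else 0
    score += 12 if s.vulnerable_users else 0
    return score


# Hypothetical inventory entries.
inventory = [
    AISystem("marketing-copy", 1, 50_000, False, False),
    AISystem("triage-chatbot", 3, 8_000, True, True),
    AISystem("loan-letter-drafter", 2, 20_000, True, False),
]
for s in sorted(inventory, key=priority, reverse=True):
    print(f"{priority(s):5.1f}  {s.name}")
```

Capping the exposure term reflects the point above that high-risk systems serving vulnerable populations come first, even when a low-risk system has many more users.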

Establish governance structures including clear roles and responsibilities for transparency implementation and oversight. Cross-functional teams typically work best, bringing together technical expertise, legal knowledge, ethical reasoning, and business perspective.

Begin with achievable quick wins that demonstrate value and build momentum. Simple improvements like clearer user notifications about AI involvement or basic model documentation can show progress while more comprehensive initiatives develop. Iterate based on feedback and lessons learned, continuously refining approaches as you gain experience.

The journey toward comprehensive transparency in generative AI is ongoing and challenging, but absolutely essential. As these powerful technologies become increasingly integrated into our digital infrastructure, transparent implementation practices will separate sustainable, trusted AI systems from those that fail to gain acceptance. Organizations that proactively embrace transparency protocols position themselves as leaders in the responsible AI movement, building trust with users, satisfying regulators, and unlocking the full transformative potential of generative AI technologies. The path forward requires commitment, collaboration, and continuous learning, but the rewards—both ethical and practical—make this investment worthwhile for any organization serious about leveraging AI responsibly and effectively.

Toni Santos is a technical researcher and ethical AI systems specialist focusing on algorithm integrity monitoring, compliance architecture for regulatory environments, and the design of governance frameworks that make artificial intelligence accessible and accountable for small businesses. Through an interdisciplinary and operationally focused lens, Toni investigates how organizations can embed transparency, fairness, and auditability into AI systems across sectors, scales, and deployment contexts. His work is grounded in a view of AI not only as technology, but as infrastructure requiring ethical oversight. From algorithm health checking to compliance-layer mapping and transparency protocol design, Toni develops the diagnostic and structural tools that help organizations deploy AI responsibly.

With a background in technical governance and AI policy frameworks, Toni blends systems analysis with regulatory research to show how AI can be used to uphold integrity, ensure accountability, and operationalize ethical principles. As the creative mind behind melvoryn.com, Toni curates diagnostic frameworks, compliance-ready templates, and transparency interpretations that bridge the gap between small business capacity, regulatory expectations, and trustworthy AI. His work is a tribute to:

- The operational rigor of Algorithm Health Checking Practices

- The structural clarity of Compliance-Layer Mapping and Documentation

- The governance potential of Ethical AI for Small Businesses

- The principled architecture of Transparency Protocol Design and Audit

Whether you're a small business owner, compliance officer, or curious builder of responsible AI systems, Toni invites you to explore the practical foundations of ethical governance: one algorithm, one protocol, one decision at a time.